Efforts to remove online hate speech have been imperfect and have raised concerns about freedom of expression. Better mechanisms, improved algorithms, and more transparency need to be put in place to deal with harmful content on social media.

Gunther Jikeli's research centers on online and offline forms of contemporary antisemitism. He runs the Social Media & Hate research lab, which focuses on recognizing antisemitic speech and other forms of bigotry on social media. The lab also collaborates with Indiana University's Observatory on Social Media to improve the identification of online antisemitism.

The challenge: Most of his students know nothing about coding, not even very simple things, or about how virtual machines work. Jikeli is teaching them to become data scientists who collect, store, and analyze data on hate messages distributed on social media. How prevalent are these messages? What are the common themes? Who are the actors? What are the networks?

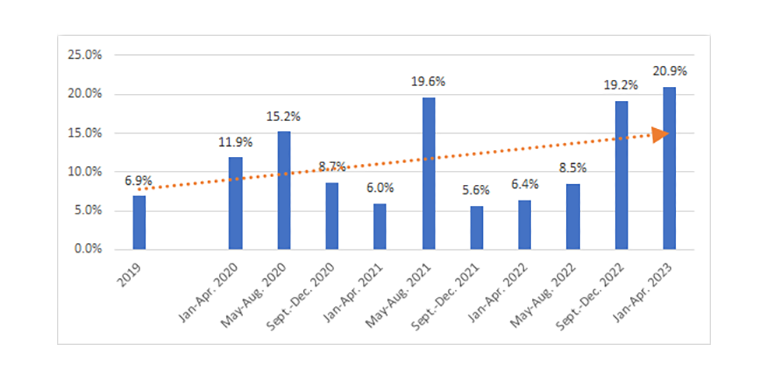

Over the course of three years, Jikeli's lab and UITS Research Technologies built a social media annotation portal to 1) gather data from social media platforms such as Twitter/X and TikTok; 2) classify and comment on content using an integrated annotation tool, making the process less time-consuming and error-prone; and 3) generate meaningful samples that reflect what's trending. (Note: The portal is available for public use. Please feel free to test it out.)

Student teams use the portal to upload and comment on samples, then run basic statistical analyses that guide further research. Jikeli points out that this seemingly simple workflow relies on the advanced cyberinfrastructure (CI) behind it: IU's research environment, which comprehensively supports data acquisition, storage, management, visualization, and analysis.
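The article does not say which statistics the student teams compute. As one hypothetical illustration of the "how prevalent is it?" question, the minimal Python sketch below estimates the share of an annotated sample that carries a given label, together with a 95% Wilson score interval (a common choice for proportions from small samples). The label names (`hateful`, `calling_out`, `neutral`) and the counts are invented for illustration; the portal's actual labels and export format are not described here.

```python
import math
from collections import Counter


def wilson_interval(successes: int, n: int, z: float = 1.96) -> tuple[float, float]:
    """Wilson score interval for a binomial proportion (z=1.96 is roughly 95%)."""
    if n == 0:
        raise ValueError("sample is empty")
    p = successes / n
    denom = 1 + z**2 / n
    center = (p + z**2 / (2 * n)) / denom
    half = z * math.sqrt(p * (1 - p) / n + z**2 / (4 * n**2)) / denom
    return center - half, center + half


def prevalence(labels: list[str], target: str) -> tuple[float, tuple[float, float]]:
    """Share of annotated posts carrying `target`, plus its Wilson interval."""
    n = len(labels)
    k = Counter(labels)[target]
    return k / n, wilson_interval(k, n)


# Hypothetical annotations: one label per sampled post (invented data).
labels = ["hateful"] * 12 + ["calling_out"] * 9 + ["neutral"] * 29
share, (lo, hi) = prevalence(labels, "hateful")
print(f"hateful: {share:.0%} (95% CI {lo:.0%} to {hi:.0%})")
```

With only 50 posts the interval is wide, which is one reason a sample-based estimate is better treated as a pointer for further research than as a final measurement.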

While his students are generally unaware of the CI, Jikeli is keenly aware that this research would be cost-prohibitive without it. Support from Research Technologies is also invaluable, because setting everything up the way it should be takes a great deal of time. The portal and annotation tool were built specifically to make this kind of research possible.

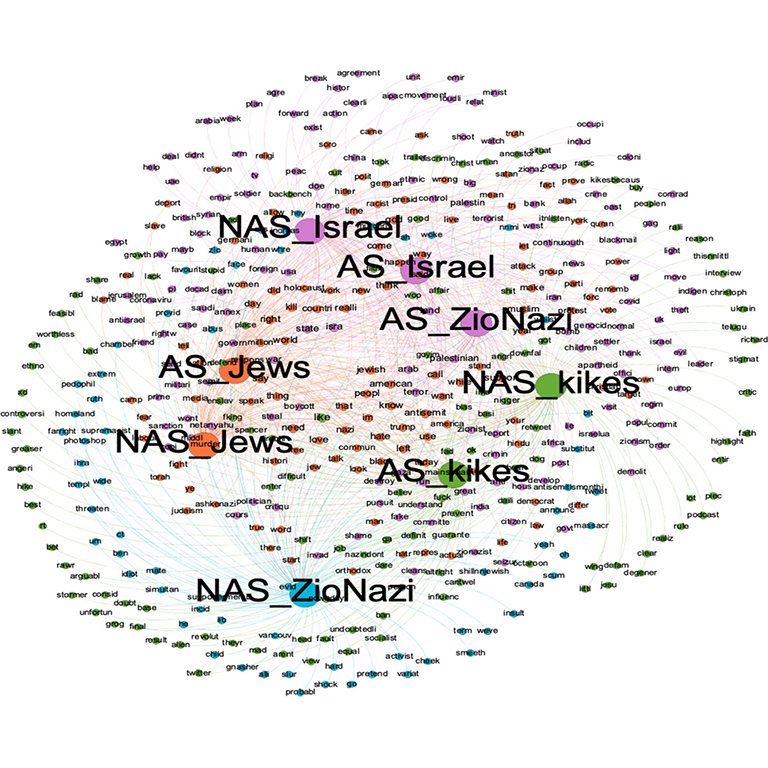

Students have been surprised by the amount of hateful content that's out there, but they have also come across many people who are calling out the haters. That context is important to take into account: even those who call out hate may quote hateful rhetoric in doing so.

When testing ChatGPT and other generative AI tools, Jikeli's students quickly see how difficult it is for these tools to classify such posts as calling out (in other words, confronting) hate speech rather than engaging in it. The tools mistakenly treat this content as hate speech because they can't pick up on the context.

Jikeli uses this to demonstrate the challenges of automatic detection. Social media platforms could do more to limit the reach of hateful and violent content if they put more resources into it. However, human judgment is still needed at some level.

He also stresses that the goal is not so much deleting content as downgrading it. If harmful content never makes it into people's social media feeds, its impact is limited. Targeting the default algorithms that propagate content could ultimately contain its spread.
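Jikeli does not describe a specific ranking method, so the following is only a minimal, hypothetical Python sketch of the general idea of downgrading rather than deleting. Every post stays in the candidate pool, but an assumed harm score (for example, from a classifier whose output a human reviewer could check) scales down its ranking score, so high-risk posts sink below the cutoff of a feed. The `Post` fields, the multiplicative penalty formula, and the `penalty` weight are all illustrative assumptions, not any platform's actual algorithm.

```python
from dataclasses import dataclass


@dataclass
class Post:
    post_id: str
    engagement: float  # hypothetical baseline ranking signal
    harm_score: float  # hypothetical classifier output in [0, 1]


def ranking_score(post: Post, penalty: float = 0.9) -> float:
    """Scale the engagement score down as estimated harm rises.

    With penalty=0.9, a post with harm_score 1.0 keeps 10% of its score.
    """
    return post.engagement * (1.0 - penalty * post.harm_score)


def build_feed(posts: list[Post], size: int) -> list[str]:
    """Return the top-`size` post IDs; nothing is deleted, low scorers just rank lower."""
    ranked = sorted(posts, key=ranking_score, reverse=True)
    return [p.post_id for p in ranked[:size]]


# Invented example data.
posts = [
    Post("a", engagement=100.0, harm_score=0.95),  # viral but likely hateful
    Post("b", engagement=60.0, harm_score=0.05),
    Post("c", engagement=40.0, harm_score=0.10),
    Post("d", engagement=30.0, harm_score=0.00),
]
print(build_feed(posts, size=3))
```

Here post "a" is never removed from `posts`; despite having the most engagement, it ranks last and falls outside a three-item feed. A penalty rather than a hard filter also means that a misclassified post, such as one calling out hate, loses reach without disappearing, which leaves room for the human judgment the article argues is still needed.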

This is a promising angle for Jikeli's students and research team to explore: How can we design a new form of automatic detection that focuses on reducing the reach of potentially hateful content rather than removing it?